-

Find duplicate rows in SQL

Sometimes you need to find and count duplicate data rows in SQL. For example, in my use case I needed to find records in a table where there was more than one usage of the same email address. This would help me figure out how widespread and severe the duplicate issue was; the table in question should not have had duplicate rows based on that column in the first place! (A missing

UNIQUEindex was the culprit).SELECT email, COUNT(*) FROM user_accounts GROUP BY email HAVING COUNT(*) > 1;The

HAVINGclause is the important part of this query. To find duplicates, we need to check if any of the groups have a record count > 1. You can put other conditions for the groups in the HAVING clause as well if required, e.g.COUNT(*) > 1 AND account_status = 1.The result of this query can then be used for a sub query/WHERE clause. The result looks like:

email | count -------------------------------- j.wayne@gmail.com | 2 g.cooper@gmail.com | 3 -

Global rescue_from error in Rails application_controller

In our rails application, we needed a way to raise security access violations based on the user profile and bubble them all the way up to the application controller. We looked into it and found you can use

rescue_fromin your application controller, which allows you to specify an error class and a method inside the controller to call when that error is encountered. For example:class ApplicationController < ActionController::Base rescue_from Errors::SomeCustomErrorClass, with: :handle_error_method def handle_error_method(error) # do some error handling end endIt’s probably not really a good idea to handle the normal ruby

StandardErrorin this way, as that may get you into trouble, but it is perfect for custom errors raised deliberately from within your application! I really like this pattern of nesting an error definition class inside the class that is the one to raise that error. For example, in the result of a security check:class SecurityCheckResult class AuthorizationError < StandardError end def run raise AuthorizationError(message) if check_invalid? end endThen in application controller I could just

rescue_from SecurityCheckResult::AuthorizationErrorto catch this anywhere in my app, and do something like a redirect or a flash. If you need to use this pattern in regular ruby code you can include theActiveSupport::Rescuablemodule. This article has a great example of using the module in regular ruby code (scroll down to the part that mentions RoboDomain). -

Getting nodejs file permissions from fs.stat mode

When you need to get file stats using NodeJS (which calls the unix

statcommand in the background), you can use thefs.statcall as shown below:fs.stat('path/to/file', function (err, stats) { });The

statsobject returned here is an instance offs.Statswhich contains amodeproperty. You can use this property to determine the unix file permissions for the file path provided. The only problem is that thismodeproperty just gives you a number (as referenced in this GitHub issue). To view the permissions in the standard unix octal format (e.g. 0445, 0777 etc) you can use the following code:var unixFilePermissions = '0' + (stats.mode & parseInt('777', 8)).toString(8);Some examples of the

modebefore and after calling the above snippet:33188 -> 0644 33261 -> 0755 -

field_with_errors changes page appearance in Rails

I had a minor issue with my Rails view when I had a list of radio buttons wrapped in labels. When there are form errors on a field like a radio button, Rails puts the CSS class

.field_with_errorson that field. This causes some issues with alignment as seen in the screenshot below:

All you need to do to fix this is make the

.field_with_errorsclass display inline like so:.field_with_errors { display: inline; } -

SOLID Object Oriented Design by Sandi Metz

-

Set Timezone from Terminal OSX

I often have to switch between timezones to test our timezone-sensitive application code. I was getting annoyed at having to open the settings screen in preferences (which is slow) and found out how to do it from the command line.

To set your timezone run:

sudo systemsetup -settimezone timezoneWhere

timezoneis a valid zone from this list:sudo systemsetup -listtimezonesFinally, you can get your current system timezone using:

sudo systemsetup -gettimezoneThis command can easily be made into an alias like so:

settz="sudo systemsetup -settimezone $@"So all you need to do to change your timezone is

settz GMT! -

Invalid Byte Sequence in US-ASCII

After some new code was checked in at work we encountered this issue in our CI as part of the build step to run RubyCritic over our code. I’d never seen it before, and the source of the error was in

buffer.rbof theparsergem library:'source=': invalid byte sequence in US-ASCII (EncodingError)I did some digging and I found that this is where RubyCritic parses each file into an abstract syntax tree for analysis. It seemed like there was a character in the file that could not be parsed correctly, and eventually I found a StackOverflow post that pointed to a tool called iconv that can be used to convert between different character encodings, and that if a conversion is unsuccessful it will throw an error and return code 1. Now this was all well and good but the error I was getting from

buffer.rbdid not tell me the currently erroring file – the best I could do was modify my local gem source to give me a list of the files that passed through the RubyCritic library for analysis.Then, now that I had a list of files, I could run each file through

iconvto check which one had invalid ASCII characters. Of course I am a programmer and thus lazy so I wasn’t going to sit there and run it manually on every damn file, so I just made a ruby script to run it on each file in my list (of which there were hundreds):def run SOURCE_FILES.each do |file| file_path = SOURCE_DIR # source dir is the full path of the root directory puts file_path puts `iconv -f us-ascii #{file_path} > /dev/null; echo $` end endI ran the script and it found the file easily by finding the one that returned 1. Then, all I did to fix the issue was delete the code that had been changed in the previous commit, re-typed it manually, then saved the file. I ran my script again and the issue was solved!

-

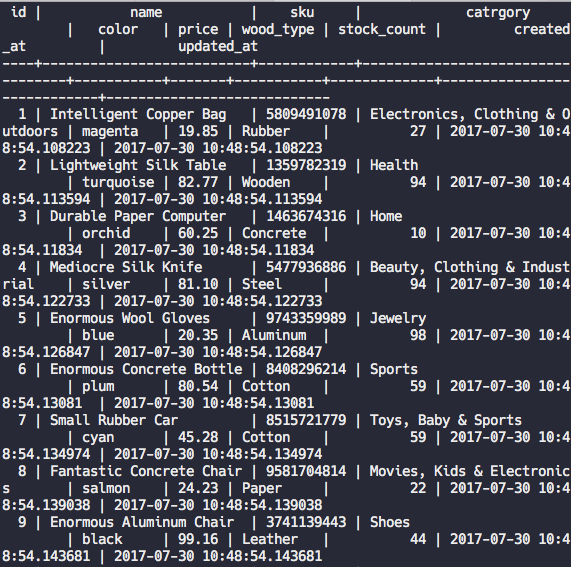

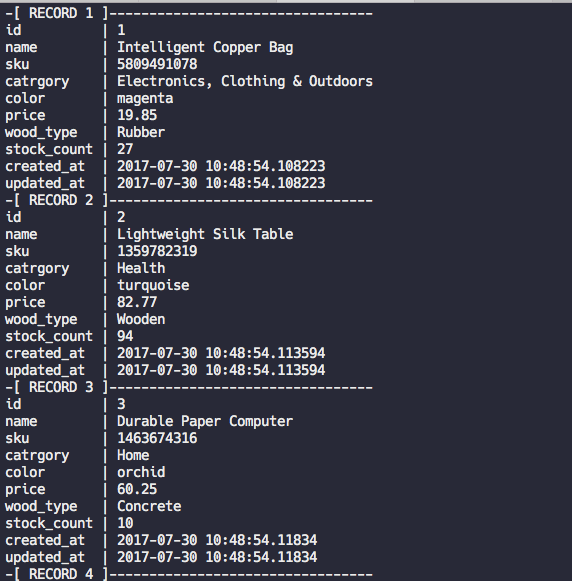

Expanded Output Format for PSQL

If you are using psql you may be getting annoyed that your query results look like this for tables with more than one or two columns:

Well, there is an answer to this problem. Just enter the command

\x onand you will turn on the expanded display option, which makes your query results look like this:

Much better!

-

PhantomJS Element Not Found Using Capybara

I ran into an odd issue this week where one of our Rails feature specs using Capybara was failing because the PhantomJS driver (driven by Poltergeist) couldn’t find an element on the page. This was strange because the same element was tested on a different page and PhantomJS could find it just fine. This occurred even with

$(document).ready(). This is kind of the layout of the page:<script> ViewJS.initialise(); </script> <div id="element-to-find"></div>And the JS we were trying to run:

$(document).ready(function () { document.getElementById('element-to-find'); });Our specs were failing with a JS error saying that element

element-to-findcould not be found. We messed around a bit with somepage.bodycalls to get the HTML of the page during the testing, and everything seemed to be in order. The only different thing about the view was that it had quite a few nested partials and the JS code was at the bottom level of these partials. On a whim, I changed the HTML to look like this, moving the JS call to the bottom of the page:<div id="element-to-find"></div> <script> ViewJS.initialise(); </script>…and it worked! It is, of course, best practice to load JavaScript at the bottom of each page, so I guess we had gotten lucky so far with how quickly the HTML was loading. Though I’m not sure why

$(document).ready()didn’t help in this situation. If you have any insight please let me know! -

Jim Weirich Ruby Talks

I was linked to these two videos by Jim Weirich when I started working with Ruby and Rails again by my friend/colleague/boss and they really helped get into the mindset of working with Ruby in a good, SOLID OOP way. They also gave me a path to not use Rails magic too often, and to decouple logic and classes from the framework as much as possible.

Advanced Ruby Class Design by Jim Weirich

Decoupling Ruby from Rails by Jim Weirich

| << Previous | 2 // 11 | Next >> |

Want to read regular updates? Subscribe via RSS!